Launch AI agents on secure code sandboxes, refine with evaluations, and ship on AI infrastructure built for enterprise scale

Receive $50 in credits to accelerate your AI software engineering

Runloop's Devboxes are code sandbox development environments that offer the fastest path to secure, production-ready AI Agents

Utilize our 2x faster vCPUs running on our custom bare-metal hypervisor

Framework-agnostic, with lightning-fast starts and ultra-fast command execution at 100ms. The only provider with both arm64 and x86 support

Tooling for Builders

Reuse tools, files, and keys via the Agent, Object, and Secret stores for seamless agentic development

Repo Connections

Automatically infer a build environment for git repositories in any language without the tedious setup

Sandbox templates

Run and customize templates with the latest agent frameworks, pre-built and optimized for Runloop Sandboxes

Git for Agent State

Snapshot and branch from sandbox disk state; develop on sandboxes with SSH, CLI, and IDE connections

Ship your product then iterate quickly & efficiently

Run 10k+ parallel sandboxes

10GB image startup time in <2s

All with leading reliability guarantees

Automatically scale sandbox CPU or memory up or down in real time based on your agents' needs

Get comprehensive monitoring, rich logging, and first-class support with interactive shells and a robust UI

Test your agents against existing academic benchmarks like SWE-bench in minutes.

Leverage the best of existing scenarios or customize to a proprietary use case

Run AI agents against SWE-Bench, R2E-Gym, SWE-Smith, and other standard benchmarks to evaluate performance. Hosted infrastructure, one-click execution

Compare results against published baselines. See how your agent stacks up on tasks the research community uses to measure progress

No setup required. Submit your agent and get scored results on the same test sets everyone else is using

Test on scenarios your AI agent will actually face. Build evaluation sets from production data or create synthetic scenarios for edge cases you need to handle

Convert Devbox states into test scenarios. Use real PRs as training data

Use your own data to evaluate performance or build a training set for fine-tuning

Testing

Evaluate your AI agents to measure performance according to your dimensions of success. Define and set your own standards for reliability, problem-solving skills and accuracy

Regression Testing

Catch silent regressions instantly by evaluating your Agents against benchmarks that are a part of your continuous integration pipeline
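
As a rough illustration of what a regression gate in a CI pipeline can look like, here is a minimal Python sketch. It is not Runloop's API: the function names, the score dictionaries, and the 0.02 tolerance are all hypothetical, and it assumes you have already collected per-benchmark scores (higher is better) from whatever evaluation run you use.

```python
from typing import Dict, List


def find_regressions(
    baseline: Dict[str, float],
    current: Dict[str, float],
    tolerance: float = 0.02,  # hypothetical threshold; tune for your benchmarks
) -> List[str]:
    """Return the benchmarks whose score dropped by more than `tolerance`.

    A benchmark that is present in `baseline` but missing from `current`
    is also reported, since a silently skipped benchmark hides regressions.
    """
    regressions = []
    for name, base_score in sorted(baseline.items()):
        score = current.get(name)
        if score is None or base_score - score > tolerance:
            regressions.append(name)
    return regressions


def ci_exit_code(baseline: Dict[str, float], current: Dict[str, float]) -> int:
    """Map the comparison to a process exit code: 0 passes the build, 1 fails it."""
    return 1 if find_regressions(baseline, current) else 0
```

In a CI job, the last step would pass the result of `ci_exit_code(...)` to `sys.exit`, so that any drop beyond the tolerance fails the build instead of going unnoticed.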

Fine Tuning

Run Reinforcement Fine Tuning (RFT) and Supervised Fine Tuning (SFT) experiments at scale to unlock new levels of agentic performance

Superior developer experience optimized specifically for agents & orchestrated AI systems

Sandbox

Secure, isolated micro-VM environment (two layers of security: VM + container)

Connectivity

Work freely with MCP Servers, Tools, SSH Tunnels, Websockets & APIs

Memory

Place, store and work with critical context inside of isolated sandbox environments

Browser + Computer Use

Enable your agents to take control and manage browsers and computers

Suspend & Resume

Minimize costs for bursty agentic workflows. Easily start, stop & resume workflows for continuous operations

SOC2, HIPAA & GDPR

Enterprise-grade security and privacy standards, fully supporting SOC 2, HIPAA, and GDPR

ARM Support

Utilize architecture-agnostic components with full support for ARM devices

Full Docker Support

Comprehensive support for Docker Compose, Docker in Docker, and nested Docker files

SOC 2 – Built with compliance in mind, focusing on secure network boundaries, isolated compute, and auditable deployments

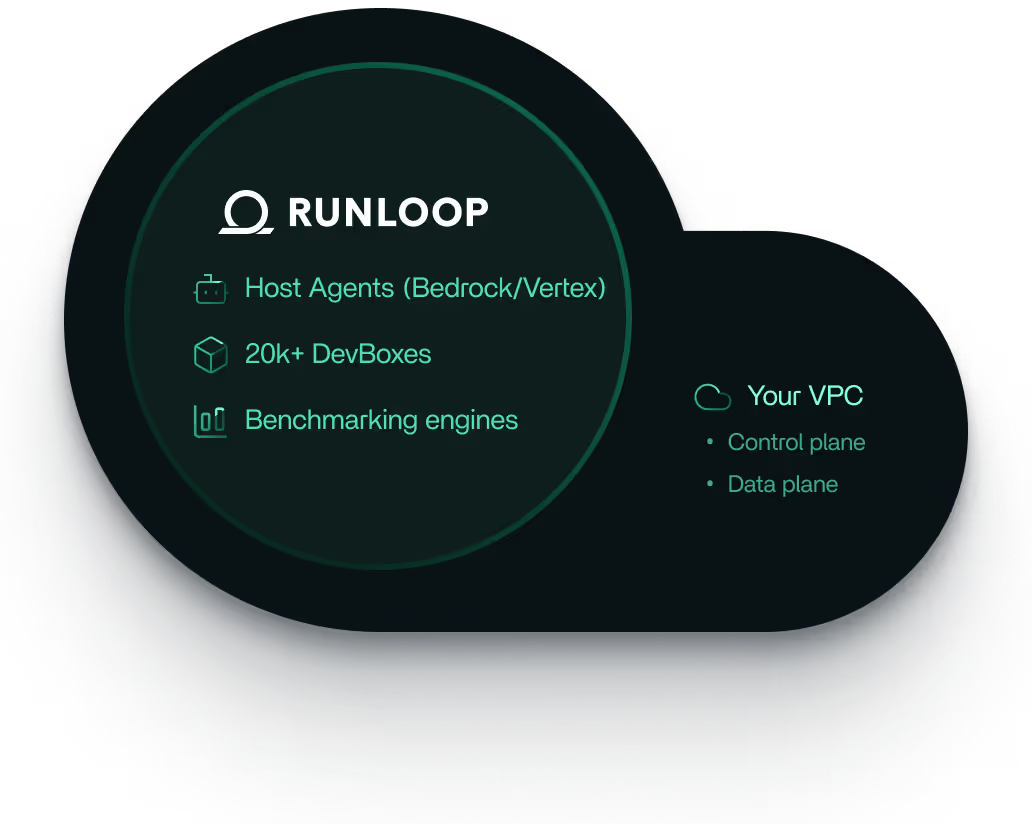

Single Tenant support – Dedicated software instance and infrastructure keeping your data and compute secure

Deploy to Your Cloud – Operate within your existing AWS, GCP, or Azure accounts while maintaining direct ownership of infrastructure and data

Multi-Region – Deploy across regions to optimize latency and availability, and to align with local data residency needs

We’re dedicated to solving the complex challenges of productionizing AI for software engineering at scale.

Integration is straightforward through Runloop's comprehensive API, which preserves existing development workflows while adding powerful sandbox capabilities. The platform provides SDK support and shell tools that can be easily incorporated into current agent architectures. The robust UI makes oversight easy.

Runloop delivers SOC2-compliant infrastructure with 24/7 support, comprehensive API access, and enterprise security standards including isolated execution environments and optimized resource allocation. The platform maintains operational reliability while enabling organizations to safely experiment with AI-assisted development at scale.

Runloop provides enterprise-grade security through isolated micro-VMs that create strong hardware-level boundaries between tenants, preventing AI-generated code from one agent from affecting another. Each Devbox runs in complete isolation with strict network policies and SOC2-compliant infrastructure.

Benchmarks provide standardized evaluation against industry datasets like SWE-smith, allowing developers to validate agent performance and measure improvements objectively. Runloop's public benchmarks eliminate setup complexity and accelerate developer productivity.

Runloop serves AI-first teams that are building coding agents for various innovative use cases. These include applications like automated code review, test generation, long-context debugging, RL-based code synthesis, and benchmark evaluation (e.g., SWE-bench, Multi-SWE). Our customers span a range of organizations, including startups focused on developing AI developer tools, enterprise innovation teams exploring autonomous agents, and academic labs conducting cutting-edge agentic research.

Traditional serverless and SaaS environments are built for stateless, short-lived tasks. AI agents are long-running, interactive, and stateful—they need a full environment (like a developer laptop), not just a function runner. Runloop’s devboxes provide that environment, with full filesystem access, browser support, snapshots, and isolation. We optimize for fast boot time, suspend/resume, and reliability under bursty, probabilistic workloads.

Runloop builds the infrastructure layer for AI coding agents. Our platform provides enterprise-grade devboxes—secure, cloud-hosted development environments where AI agents can safely build, test, and deploy code. These devboxes handle complex, stateful workflows that traditional SaaS infrastructure can't support.

Yes, Runloop serves both individual developers through generous free tiers and enterprises requiring dedicated resources and guaranteed performance. We offer tiered service levels from cost-effective experimentation to premium enterprise deployments with full compliance standards.

Runloop is usage-based, with pricing tiers based on compute resources, memory, and desired SLA. We support generous free trials with usage credits to test the platform. For enterprise customers, we offer discounts by volume and commitment-based pricing.